AI Megathread

-

@Sage

People are out here hitting people in the head with the hammer, so at the moment I am trying to establish common ground that we can all agree on, like “hitting people in the head with the hammer is bad”. I recognize the debate on whether the hammer itself is bad or not is more nuanced. I think we can all agree slugging people in the head with the hammer is probably wrong, regardless of whether the hammer is made of fair trade rubber or blood diamonds.

-

@Sage said in AI Megathread:

Yes, but in this case what I am referring to is whether ChatGPT is, in and of itself, plagiarism.

If I write something in a paper, and don’t cite where I found that information, that’s treated as plagiarism - even if that work is myself from a previous writing. Because I’m taking their idea, without giving them credit for it.

Plagiarising is defined thusly: “to steal and pass off (the ideas or words of another) as one’s own : use (another’s production) without crediting the source”(Merriam-Webster, 2023).

Therefore, given that ChatGPT can’t create its own ideas (Thorp, 2023) or synthesise information without many errors (Park et al., 2023), I would argue that it does plagiarise, by definition.

References

Merriam-Webster. (2023, August 9). Definition of plagiarizing. Merriam-Webster.com. https://www.merriam-webster.com/dictionary/plagiarizing

Thorp, H. H. (2023). ChatGPT is fun, but not an author. Science, 379(6630), 313–313. https://doi.org/10.1126/science.adg7879

Park, Y. J., Kaplan, D. M., Ren, Z., Hsu, C.-W., Li, C., Xu, H., Li, S., & Li, J. (2023). Can ChatGPT be used to generate scientific hypotheses? arXiv. https://doi.org/10.48550/arxiv.2304.12208

-

@Sage said in AI Megathread:

I’m just stating that I think the use of the word ‘plagiarism’ is probably not correct in this case.

In the case where I said that ChatGPT is “plagiarizing itself”, that was meant to be tongue-in-cheek. You can’t, by definition, plagiarize yourself.

But in the broader sense of “is what ChatGPT does plagiarism”, I disagree for the same reasons Pavel cited here:

@Pavel said in AI Megathread:

Therefore, given that ChatGPT can’t create its own ideas (Thorp, 2023) or synthesise information without many errors (Park et al., 2023), I would argue that it does plagiarise, by definition.

We can quibble about the exact lines between plagiarism, copyright infringement, trademark infringement, etc. but it’s just semantics. Fundamentally it’s all about profiting off the work of others without proper attribution, permission, and compensation. Even if a million courts said it was legal (which I highly doubt but we’ll see), you would still not convince me that it wasn’t wrong.

-

@Faraday said in AI Megathread:

You can’t, by definition, plagiarize yourself

Technically you can, though it’s less about “using someone else’s ideas or words” and more about using pre-existing work without acknowledgement. It’s a silly name, as far as I’m concerned, but it’s a thing.

-

@Pavel said in AI Megathread:

If I write something in a paper, and don’t cite where I found that information, that’s treated as plagiarism.

By your reasoning, if I say “George Washington was the first president of the United States of America” then it is plagiarism, despite the fact that this is simply something I know. Yes, I did originally learn it from another source, but since I have not stated the source, that is plagiarism.

No, that is simply poor referencing.

Yes, I’m aware that there are professors out there who will decry that as ‘plagiarism’, but guess what? Professors don’t actually get to decide the definition of words beyond the boundaries of their classrooms, and they really need to get over it, because by such a definition nearly everything they produce is also rife with ‘plagiarism’ (but of course, since no student would ever dare call them out on it, they remain relatively insulated from that fact).

After all, you must have read the phrase ‘If I write something in a paper’, or something close which gave you the idea, and you didn’t cite that, did you? How about the concept of a citation? You forgot to include where that came from, so does that mean you are passing it off as ‘your own’?

The idea that not citing yourself is plagiarism is clearly ludicrous because, as your definition shows, the ideas or words need to originate with another person for it to be plagiarism; yet, yes, those professors will mark it as plagiarism (while not properly citing themselves in most of their handouts to their students).

What those professors, and you by extension, are doing is playing the role of Humpty Dumpty in Lewis Carroll’s “Through the Looking-Glass”:

‘When I use a word,’ Humpty Dumpty said in rather a scornful tone, ‘it means just what I choose it to mean–neither more nor less.’

-

@Pavel said in AI Megathread:

Technically you can

No, you can’t. You can self-plagiarize, but you cannot plagiarize yourself. As a compound word self-plagiarize has its own meaning.

A pineapple is not related to pine or apple trees, after all.

-

@Sage I’m going to assume your hostile tone isn’t intentional.

All it comes down to, whatever your semantics may be, is an ethical code. That is to say a code imposed by an authority or administrative body of some kind. That code defines the words as far as it’s concerned. So plagiarism for me is different to you because I habitually operate under a different ethical code to you. It’s a perspective issue.

And ultimately that’s what is likely to happen vis-à-vis ChatGPT and other such artful devices: Some authority will impose an ethical code on its use (many universities are now requiring its use to be cited and indicated on the title page of the paper, for instance), and will define whatever terms it wants. Ludicrous or not.

-

@Pavel Hostility was not intended. Instead I was mainly trying to show that the definition that most professors attempt to apply is not only nonsensical, it is also hypocritical.

And yes, the authority does get to define the code as far as it is concerned. That is basically what I stated. I will even agree that they not only get to, but they often need to because words tend to not have absolutely agreed upon definitions (whether Pluto is/was a planet is a good example of the need for specific definition in certain contexts).

However, we are not talking about ‘as far as they are concerned’. ChatGPT is not one of their students, so their definition does not apply as to whether ChatGPT should generally be considered to be plagiarizing.

After all, if the ones in charge decided, for some absurd reason, to call a lack of proper citations ‘murder’ you would not expect the police to arrest students and for them to be tried, would you? Yet I don’t think either of us would argue that they couldn’t call it that, just that it would be foolish for them to do so.

I agree that academic authorities absolutely can, and even should, include rules for its use (or banning its use) in their codes. Again, that is not my argument in the slightest (just as it is not my argument that OpenAI should be free to load whatever material they choose into their models).

My argument is purely as to whether ChatGPT can, itself, be considered to be plagiarizing according to the commonly accepted definitions of plagiarism.

-

@Sage said in AI Megathread:

My argument is purely as to whether ChatGPT can, itself, be considered to be plagiarizing according to the commonly accepted definitions of plagiarism.

I would say both yes and no. It doesn’t have intention, so it doesn’t plagiarise per se. But those who use it have intent, and given that they quite obviously are using other people’s work without giving due credit, then it would be plagiarism.

Citing sources isn’t just to give proper credit. It’s to give readers somewhere to look whenever you use information that isn’t found within whatever they’re currently reading. It isn’t only the copying of others’ work that plagiarism stands against, but ensuring the capacity for information transfer and learning.

For instance, using your example of Georgie Washers being the first president of the United States. Someone is going to come across that piece of information, today, for the first time. Not here, sure, but somewhere. The idea of citing that information is to give people somewhere else to look in order to find out more.

But that’s more an interesting tid-bit than anything of relevance.

ChatGPT uses other people’s work to produce ‘new’ work, without providing credit or reference to those other works. But it’s also not a being with intentionality. So maybe it can’t plagiarise, but anyone who uses it in any setting that acknowledges the idea of plagiarism is plagiarising.

ETA:

@Sage said in AI Megathread:

Instead I was mainly trying to show that the definition that most professors attempt to apply is not only nonsensical, it is also hypocritical.

This might be true in some places, but in my academic experience the application of the definition is usually thus: If you provide a pertinent fact that the current paper you’re writing doesn’t demonstrate to be true (or prove, in layman’s terms), then you have to cite either where you learned that fact or a reliable source that does demonstrate it to be true.

Self-plagiarism (which is plagiarising yourself, according to Dictionary.com) is mostly considered bad because it’s just rehashing the same thing you’ve said before and not giving anything new. Especially for students, wherein written work is supposed to assess what you know etc. I think it’s a stupid name but not a terrible concept.

-

@Faraday said in AI Megathread:

This ties in with something that I think most folks don’t realize about AI. It’s not ACTUALLY generating something original. Two people on different computers using the same prompt with the same seed value(*) will get the EXACT SAME response - word-for-word, pixel-for-pixel. This is one reason why AI-generated works can’t themselves be copyrighted.

Technical caveat: depending on the settings, this isn’t always true. Some setups or techniques will make outputs non-reproducible (or more accurately, practically non-reproducible unless you can replicate the entire state of the machine at the time of image creation, including hardware). Early versions of the xformers library for Stable Diffusion (SD) were an example of this. Interestingly, the techniques that cause this problem (and you want reproducibility, because determinism improves your results) are performance-enhancing ones; xformers is an optimization for NVIDIA GPUs. Performance is always going to be desirable, so it seems likely that some branches of development will pursue this kind of technology.
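The seed point can be sketched with a toy sampler. This assumes Python's `random.Random` as a stand-in for a generator's sampling step, and the vocabulary and weights are hypothetical. It only shows why a fixed seed plus fixed inputs yields identical output; it does not model the hardware-level nondeterminism described in the caveat.

```python
import random

# Hypothetical toy "model": a fixed next-word distribution.
VOCAB = ["text", "game", "engine", "roleplay", "online", "server"]
WEIGHTS = [0.3, 0.2, 0.2, 0.15, 0.1, 0.05]

def generate(seed, length=8):
    """Sample `length` words using a private RNG seeded with `seed`."""
    rng = random.Random(seed)  # independent generator, unaffected by global state
    return rng.choices(VOCAB, weights=WEIGHTS, k=length)

# Same seed on two "machines" gives the same output, word for word.
print(generate(42) == generate(42))  # True
print(generate(42))
print(generate(7))  # a different seed will usually give a different sequence
```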

It will be interesting to see how the legal stuff shakes out in the long term, because I don’t see that this division is clear cut. If you replicated an artist’s exact steps, you could also replicate their art pixel-for-pixel. It’s impractical for a painter, sure: we’re bad at fluid dynamics, so this would be akin to knowing the exact hardware state above. But there are plenty of all-digital artists nowadays. Replicating Photoshop (PS) work is trivial. In fact, the software is already keeping a record of the steps required to generate the pixels, and you can step back and forth through them with undo-redo. Isn’t that the same thing?
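The undo-redo comparison can be sketched as a recorded history of edits. This is a hypothetical toy editor (`Canvas` and `replay` are illustrative names), not how Photoshop actually stores its history; it only shows that replaying the same recorded steps on a blank canvas reproduces the same pixels.

```python
from dataclasses import dataclass, field

@dataclass
class Canvas:
    """Toy editor: pixels map (x, y) -> colour; every edit is logged."""
    pixels: dict = field(default_factory=dict)
    history: list = field(default_factory=list)     # steps currently applied
    redo_stack: list = field(default_factory=list)  # steps that were undone

    def paint(self, xy, colour):
        # Record (position, previous colour, new colour) so the step can be reversed.
        self.history.append((xy, self.pixels.get(xy), colour))
        self.pixels[xy] = colour
        self.redo_stack.clear()  # a fresh edit discards the redo branch

    def undo(self):
        xy, old, new = self.history.pop()
        if old is None:
            del self.pixels[xy]  # pixel was blank before this step
        else:
            self.pixels[xy] = old
        self.redo_stack.append((xy, old, new))

    def redo(self):
        xy, old, new = self.redo_stack.pop()
        self.pixels[xy] = new
        self.history.append((xy, old, new))

def replay(steps):
    """Re-run a recorded history on a blank canvas and return the pixels."""
    copy = Canvas()
    for xy, _old, new in steps:
        copy.paint(xy, new)
    return copy.pixels

artist = Canvas()
artist.paint((0, 0), "red")
artist.paint((1, 0), "blue")
artist.paint((0, 0), "green")
artist.undo()  # (0, 0) goes back to "red"
artist.redo()  # and forward to "green" again
print(replay(artist.history) == artist.pixels)  # True
```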

At what point is the # of steps taken by an ‘AI artist’ to generate a ~~unique~~ interesting result sufficient to represent ‘creativity’?

-

@bored said in AI Megathread:

At what point is the # of steps taken by an ‘AI artist’ to generate a unique result sufficient to represent ‘creativity’?

when the steps include not using automated image generation and making the art themselves

-

@bored said in AI Megathread:

or more accurately, practically non-reproducible unless you can replicate the entire state of the machine at the time of image creation, including hardware

When you say the entire hardware state, do you mean things like the exact composition of the silicon in a chip, or is it more macro-scale, like having the same graphics card?

-

@Pavel said in AI Megathread:

Citing sources isn’t just to give proper credit. It’s to give readers somewhere to look whenever you use information that isn’t found within whatever they’re currently reading.

Absolutely, and if you want to criticize ChatGPT for not providing links as to where it got the information, I wouldn’t argue against that (though it isn’t clear to me if the LLM is even capable of doing that given how it constructs its replies).

It isn’t only the copying of others’ work that plagiarism stands against, but ensuring the capacity for information transfer and learning.

I think that’s the crux of the problem. The commonly accepted definition of plagiarism is very much concerned with the copying of other people’s work. Its etymological roots even come from the Latin word for kidnapping.

In academia, however, the definition and purpose have changed over time to the point where issues such as citation are falling within its purview in those specific circles. As this occurs the academic definition drifts further and further from the commonly accepted one, and this becomes problematic.

That’s all sort of beside the point, however, because for this discussion we are not talking about an academic paper or a student. We are talking about the term as it is generally applied.

But it’s also not a being with intentionality.

I would definitely argue that a machine’s lack of intentionality is not the basis of my reasoning. I could quite definitely construct a program whose output I feel would meet the commonly accepted definition of plagiarism (feed in a block of text and the program swaps out words for synonyms). I just don’t think the LLM, as it has been described, meets such a definition.
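A minimal sketch of the kind of synonym-swapping program described above, assuming a small hard-coded synonym table (`SYNONYMS` and `synonym_swap` are hypothetical names). Everything except the listed words is copied verbatim from the input, which is the behaviour being contrasted with how an LLM produces text.

```python
import re

# Hypothetical synonym table; a real tool might consult a thesaurus instead.
SYNONYMS = {
    "big": "large",
    "quick": "fast",
    "shows": "demonstrates",
    "use": "utilize",
}

def synonym_swap(text):
    """Replace each known word with its synonym; copy everything else unchanged."""
    def swap(match):
        word = match.group(0)
        replacement = SYNONYMS.get(word.lower())
        if replacement is None:
            return word
        # Keep a leading capital so the output still reads naturally.
        return replacement.capitalize() if word[0].isupper() else replacement
    return re.sub(r"[A-Za-z]+", swap, text)

print(synonym_swap("The quick study shows a big effect."))
# The fast study demonstrates a large effect.
```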

N.B.: I will add that I could be wrong due to a misunderstanding of the LLM, either from a mistake on my part or untrue statements on the part of OpenAI, but then we get into another whole Pandora’s box of how we ‘know things’. I also agree completely that students using it without attribution are guilty of plagiarism themselves.

I think it’s a stupid name but not a terrible concept.

Agreed on both points. There’s quite definitely a reason for the existence of such a term, it’s just that the term should not really lead to the implication that it does, because of the general definitions involved.

It is sort of like if people used the term ‘self-kidnapping’ for when someone takes a sick day even though they are not really ill.

I think at the end of the day we probably have more in common in our feelings about ChatGPT than differences. It is just that I am somewhat opposed to people simply stating that ‘ChatGPT plagiarizes’ without at least providing more context (such as the specific use of plagiarism in this instance).

Ironically, this is because of, as you put it, ‘information transfer and learning’. Without the context it is too likely that the average person will read the sentence and assume it to mean that ChatGPT is functioning like my theoretical program, copying large blocks of text and merely swapping around some words a bit without acknowledgement given to the original writer, as opposed to them understanding that what you are saying is that it does not provide references to where its information has come from.

-

@bored If you replicate an artist’s exact steps with paint and brush on canvas, you still wouldn’t have an original work. You’d have a copy. It wouldn’t be original at all. Indeed, it’s one of the ways the Renaissance painters trained their apprentices. There are paintings which were collaborative efforts and those where it is not clear who actually did the work.

But paintings are not done in pixels. Really, most original digital works only consist of pixels in that they are a model of paint. The value of digital art comes not in its representations on storage media but in the original idea expressed by the artist. In this way, they are just like paintings in that only the one who put the original composition together gets credit for creativity.

Image generating AIs are essentially creating collages of other works, works that they largely are not licensed to use. And while collage can have value as an art form, the fact that something is a collage is part of its acknowledgement as a creative work.

-

@Sage said in AI Megathread:

Ironically, this is because of, as you put it, ‘information transfer and learning’. Without the context it is too likely that the average person will read the sentence and assume it to mean that ChatGPT is functioning like my theoretical program, copying large blocks of text and merely swapping around some words a bit without acknowledgement given to the original writer, as opposed to them understanding that what you are saying is that it does not provide references to where its information has come from.

Are you suggesting that ChatGPT does not just regurgitate paragraphs it finds on the internet? Because it certainly does. Ask @Faraday about how it just vomits up stuff from her website when asked about Ares.

-

@Tributary said in AI Megathread:

@Sage said in AI Megathread:

Ironically, this is because of, as you put it, ‘information transfer and learning’. Without the context it is too likely that the average person will read the sentence and assume it to mean that ChatGPT is functioning like my theoretical program, copying large blocks of text and merely swapping around some words a bit without acknowledgement given to the original writer, as opposed to them understanding that what you are saying is that it does not provide references to where its information has come from.

Are you suggesting that ChatGPT does not just regurgitate paragraphs it finds on the internet? Because it certainly does. Ask @Faraday about how it just vomits up stuff from her website when asked about Ares.

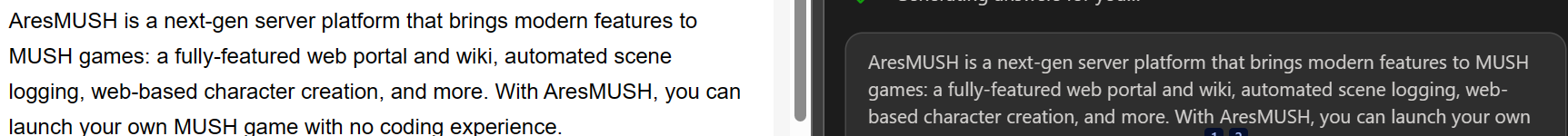

Ares site on the left, GPT via Bing on the right.

-

@Sage said in AI Megathread:

It is just that I am somewhat opposed to people simply stating that ‘ChatGPT plagiarizes’ without at least providing more context

Well, yes. That’s why I was arguing that ChatGPT cannot plagiarise as that requires intent. Like the difference between saying something that isn’t true versus lying. I believe intent matters when talking about plagiarism, which is why I say that people using ChatGPT are plagiarising, but ChatGPT itself isn’t.

BUT if we grant some casual intentionality to GPT and its cousins, for the sake of a more rudimentary understanding, then yes it plagiarises. It takes the work of others, mixes and messes with things, and spits it out again as seen in the above example. It cannot figure things out, make logical guesses, or have creative thought. There’s no way for it to tell you anything without someone else having told it first.

-

@Sage said in AI Megathread:

By your reasoning, if I say “George Washington was the first president of the United States of America” then it is plagiarism, despite the fact that this is simply something I know. Yes, I did originally learn it from another source, but since I have not stated the source, that is plagiarism.

That is not remotely what I said.

Saying that “D-Day happened on June 6, 1944” is not plagiarism. Regurgitating paragraphs with near-identical wording in sufficient quantities is. That is what I’m saying ChatGPT does at its core. It is not understanding and then restating its own understanding of facts like a human would. It is assembling words using an algorithmic autocomplete that’s asking “what’s the statistically most likely word to come next”. And how does it know what’s most likely to come next? From existing writings it has ingested.
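To illustrate the “statistically most likely word to come next” idea, here is a toy bigram autocomplete. Real LLMs predict subword tokens with a neural network rather than counting word pairs, so this is an analogy, not how ChatGPT is built; the names and the one-sentence corpus are hypothetical. It does show one relevant effect: when the training data on a topic is thin, the “most likely” continuation is simply whatever the one source said.

```python
from collections import Counter, defaultdict

def train_bigrams(corpus):
    """For each word, count which words followed it in the training text."""
    words = corpus.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def autocomplete(follows, start, max_words=20):
    """Greedily append the most frequent follower of the last word."""
    out = [start.lower()]
    for _ in range(max_words):
        options = follows.get(out[-1])
        if not options:
            break  # nothing was ever seen after this word
        out.append(options.most_common(1)[0][0])
    return " ".join(out)

# Hypothetical one-sentence "training set".
TEXT = "ares is a text based roleplaying engine for mush games"
model = train_bigrams(TEXT)
print(autocomplete(model, "ares"))
# ares is a text based roleplaying engine for mush games
```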

@Pavel said in AI Megathread:

I believe intent matters when talking about plagiarism,

Yeah at some point we can get bogged down in semantics. A plagiarism detector tool isn’t detecting intent, merely judging the output, yet that’s the word we use for it.
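As an example of a check that judges only the output, one common approach to spotting copied text is to compare overlapping word n-grams between two documents. The sketch below uses word trigrams and Jaccard similarity; `ngrams` and `overlap` are hypothetical names, and commercial detectors are likely far more elaborate. Nothing in it knows or asks about the writer’s intent.

```python
import re

def ngrams(text, n=3):
    """Set of overlapping word n-grams, lower-cased, punctuation dropped."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def overlap(a, b, n=3):
    """Jaccard similarity of the two n-gram sets: 0.0 = nothing shared, 1.0 = same set."""
    ga, gb = ngrams(a, n), ngrams(b, n)
    if not ga or not gb:
        return 0.0  # too short to form any n-gram
    return len(ga & gb) / len(ga | gb)

source = "AresMUSH is a text-based roleplaying game engine"
candidate = "AresMUSH is a text based roleplaying game server"
print(round(overlap(source, candidate), 2))  # 0.71
```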

-

@Faraday said in AI Megathread:

Yeah at some point we can get bogged down in semantics.

One of many things linguists and computer scientists have in common.

-

@Trashcan Don’t really know about Bing and its implementation. What I do know is this:

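```python
# Uses the legacy openai-python interface (versions before 1.0);
# the API key is read from the OPENAI_API_KEY environment variable.
import openai

completion = openai.ChatCompletion.create(
    model="gpt-3.5-turbo-16k",
    messages=[
        {"role": "user", "content": "What is AresMUSH"}
    ],
    temperature=1.25,  # values above 1 make sampling more random
    max_tokens=1024,
    top_p=1,
    frequency_penalty=0,
    presence_penalty=0,
)
d = completion.choices
print(d)
```

This gives me the following result: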

```
[<OpenAIObject at 0x113f32450> JSON: {
  "index": 0,
  "message": {
    "role": "assistant",
    "content": "AresMUSH is a text-based roleplaying game engine that allows users to create and \
run their own roleplay games online. It is built on the MUSH (Multi-User Shared \
Hallucination) platform, which is a type of virtual world or multi-user dungeon. \
AresMUSH provides features and tools for creating and managing game settings, \
characters, and storylines, and allows multiple players to interact with each \
other in real-time through text-based chat and roleplay. It provides a flexible \
and customizable framework for creating a wide range of roleplaying settings \
and experiences."
  },
  "finish_reason": "stop"
}]
```

It could be that the results @Faraday is reporting are because it’s an older model, something to do with how they set the variables for the question, or maybe some other aspect of Bing’s implementation. I don’t really know.
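On the “how they set the variables” point: the call above uses `temperature=1.25`. In the usual description of LLM sampling, temperature divides the model’s raw token scores before a softmax turns them into probabilities, so values above 1 flatten the distribution and less likely continuations get picked more often. A sketch of that standard formulation with made-up scores; whether OpenAI’s service applies exactly this is an assumption.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities after dividing by temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max so exp() cannot overflow
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate tokens
for t in (0.5, 1.0, 1.25):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Running it shows the top candidate’s share shrinking as the temperature rises, which is one reason two runs of the same prompt can diverge.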

Please note, I am not accusing anyone of lying. I’m just showing my results.