AI Megathread

-

@Faraday said in AI Megathread:

This ties in with something that I think most folks don’t realize about AI. It’s not ACTUALLY generating something original. Two people on different computers using the same prompt with the same seed value(*) will get the EXACT SAME response - word-for-word, pixel-for-pixel. This is one reason why AI-generated works can’t themselves be copyrighted.

Technical caveat: depending on the settings, this isn’t always true. Some setups or techniques will make outputs non-reproducible (or more accurately, practically non-reproducible unless you can replicate the entire state of the machine at the time of image creation, including hardware). The early xformers library for SD was an example of this. Interestingly, the techniques that cause this problem (and it is a problem: you want reproducibility because determinism improves your results) are performance-enhancing ones; xformers is an optimization for NVIDIA GPUs. Performance is always going to be desirable, so it’s quite possible that some branches of development will pursue this kind of technology.
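A toy sketch of both halves of that point, in Python. Nothing here is Stable Diffusion code: `fake_generate` is a made-up stand-in for a generator whose only randomness comes from a seeded PRNG. The second part shows one well-known way fast parallel math can break reproducibility: floating-point addition is not associative, so adding the same numbers in a different order can change the low bits of the result. Whether that is the exact mechanism behind the early xformers behavior is an assumption, not something stated in this thread.

```python
import random


def fake_generate(prompt: str, seed: int, n: int = 8) -> list[int]:
    """Hypothetical generator: every 'pixel' is drawn from a PRNG seeded by prompt and seed."""
    rng = random.Random(f"{prompt}|{seed}")  # string seeds are hashed deterministically
    return [rng.randrange(256) for _ in range(n)]


# Same prompt and same seed: identical output, on any machine running this code.
same_a = fake_generate("a cat", seed=42)
same_b = fake_generate("a cat", seed=42)

# Same prompt, different seed: a different output.
other = fake_generate("a cat", seed=43)

# Floating-point addition is not associative, so the order of a sum matters.
left_to_right = (0.1 + 0.2) + 0.3
right_to_left = 0.1 + (0.2 + 0.3)

print(same_a == same_b)                # True
print(left_to_right == right_to_left)  # False
```

A kernel that splits a sum across threads in whatever order finishes first is, in effect, choosing between those two groupings at run time.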

It will be interesting to see how the legal stuff shakes out in the long term, because I don’t see that this division is clear cut. If you replicated an artist’s exact steps, you could also replicate their art pixel-for-pixel. It’s impractical for a painter, sure: we’re bad at fluid dynamics, so this would be akin to knowing the exact hardware state above. But there are plenty of all-digital artists nowadays. Replicating PS work is trivial. In fact, the software is already keeping a record of the steps required to generate the pixels, and you can step back and forth through them with undo-redo. Isn’t that the same thing?

At what point is the # of steps taken by an ‘AI artist’ to generate a ~~unique~~ interesting result sufficient to represent ‘creativity’?

-

@bored said in AI Megathread:

At what point is the # of steps taken by an ‘AI artist’ to generate a unique result sufficient to represent ‘creativity’?

when the steps include not using automated image generation and making the art themselves

-

@bored said in AI Megathread:

or more accurately, practically non-reproducible unless you can replicate the entire state of the machine at the time of image creation, including hardware

When you say the entire hardware state, does that mean things like the exact composition of the silicon in a chip, or is it more macro-scale, like having the same graphics card?

-

@Pavel said in AI Megathread:

Citing sources isn’t just to give proper credit. It’s to give readers somewhere to look whenever you use information that isn’t found within whatever they’re currently reading.

Absolutely, and if you want to criticize ChatGPT for not providing links as to where it got the information I wouldn’t argue against that (though it isn’t clear to me if the LLM is even capable of doing that given how it constructs its replies).

It isn’t only the copying of others’ work that plagiarism stands against, but ensuring the capacity for information transfer and learning.

I think that’s the crux of the problem. The commonly accepted definition of plagiarism is very much concerned with the copying of other people’s work. Its etymological roots even come from the Latin word for kidnapping.

In academia, however, the definition and purpose have changed over time to the point where issues such as citation are falling within its purview in those specific circles. As this occurs the academic definition drifts further and further from the commonly accepted one, and this becomes problematic.

That’s all sort of beside the point, however, because for this discussion we are not talking about an academic paper or a student. We are talking about the term as it is generally applied.

But it’s also not a being with intentionality.

I would definitely argue that a machine’s lack of intentionality is not the reasoning behind my thinking. I could quite definitely construct a program whose output I feel would meet the commonly accepted definition of plagiarism (feed in a block of text and the program swaps out words for synonyms). I just don’t think the LLM, as has been described, meets such a definition.
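As a rough illustration of the kind of program described above (text in, same text out with words swapped for synonyms), here is a minimal sketch. The `SYNONYMS` table and the `swap_synonyms` name are invented for the example; a real tool would presumably use a proper thesaurus.

```python
import re

# Hypothetical, tiny synonym table used only for this illustration.
SYNONYMS = {"quick": "fast", "big": "large", "happy": "glad"}


def swap_synonyms(text: str) -> str:
    """Replace each known word with its synonym and leave everything else untouched."""

    def replace(match: re.Match) -> str:
        word = match.group(0)
        synonym = SYNONYMS.get(word.lower())
        if synonym is None:
            return word  # unknown word: keep it exactly as written
        # Keep a leading capital so sentence starts still look right.
        return synonym.capitalize() if word[0].isupper() else synonym

    return re.sub(r"[A-Za-z]+", replace, text)


print(swap_synonyms("The quick dog was happy."))  # The fast dog was glad.
```

The sentence structure, word order, and punctuation of the input all survive intact, which is what makes this sort of output easy to recognize as a copy.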

N.B.: I will add that I could be wrong due to a misunderstanding of the LLM, either from a mistake on my part or untrue statements on the part of OpenAI, but then we get into another whole Pandora’s box of how we ‘know things’. I also agree completely that students using it without attribution are guilty of plagiarism themselves.

I think it’s a stupid name but not a terrible concept.

Agreed on both points. There’s quite definitely a reason for the existence of such a term, it’s just that the term should not really lead to the implication that it does, because of the general definitions involved.

It is sort of like if people used the term ‘self-kidnapping’ for when someone takes a sick day even though they are not really ill.

I think at the end of the day we probably have more in common with our feeling of ChatGPT than our differences. It is just that I am somewhat opposed to people simply stating that ‘ChatGPT plagiarizes’ without at least providing more context (such as the specific use of plagiarism in this instance).

Ironically, this is because of, as you put it, ‘information transfer and learning’. Without the context it is too likely that the average person will read the sentence and assume it to mean that ChatGPT is functioning like my theoretical program, copying large blocks of text and merely swapping around some words a bit without acknowledgement given to the original writer, as opposed to them understanding that what you are saying is that it does not provide references to where its information has come from.

-

@bored If you replicate an artist’s exact steps with paint and brush on canvas, you still wouldn’t have an original work. You’d have a copy. It wouldn’t be original at all. Indeed, it’s one of the ways the Renaissance painters trained their apprentices. There are paintings which were collaborative efforts and those where it is not clear who actually did the work.

But paintings are not done in pixels. Really, most original digital works only consist of pixels in that they are a model of paint. The value of digital art comes not in its representations on storage media but in the original idea expressed by the artist. In this way, they are just like paintings in that only the one who put the original composition together gets credit for creativity.

Image generating AIs are essentially creating collages of other works, works that they largely are not licensed to use. And while collage can have value as an art form, the fact that something is a collage is part of its acknowledgement as a creative work.

-

@Sage said in AI Megathread:

Ironically, this is because of, as you put it, ‘information transfer and learning’. Without the context it is too likely that the average person will read the sentence and assume it to mean that ChatGPT is functioning like my theoretical program, copying large blocks of text and merely swapping around some words a bit without acknowledgement given to the original writer, as opposed to them understanding that what you are saying is that it does not provide references to where its information has come from.

Are you suggesting that ChatGPT does not just regurgitate paragraphs it finds on the internet? Because it certainly does. Ask @Faraday about how it just vomits up stuff from her website when asked about Ares.

-

@Tributary said in AI Megathread:

@Sage said in AI Megathread:

Ironically, this is because of, as you put it, ‘information transfer and learning’. Without the context it is too likely that the average person will read the sentence and assume it to mean that ChatGPT is functioning like my theoretical program, copying large blocks of text and merely swapping around some words a bit without acknowledgement given to the original writer, as opposed to them understanding that what you are saying is that it does not provide references to where its information has come from.

Are you suggesting that ChatGPT does not just regurgitate paragraphs it finds on the internet? Because it certainly does. Ask @Faraday about how it just vomits up stuff from her website when asked about Ares.

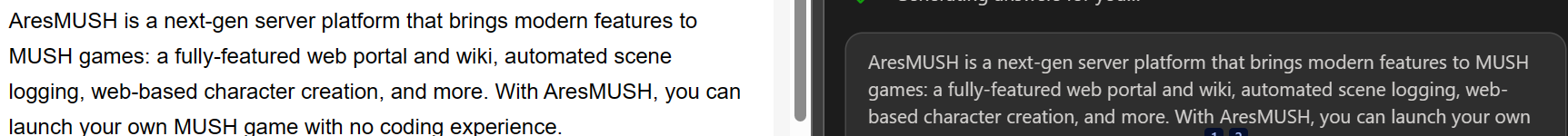

Ares site on the left, GPT via Bing on the right.

-

@Sage said in AI Megathread:

It is just that I am somewhat opposed to people simply stating that ‘ChatGPT plagiarizes’ without at least providing more context

Well, yes. That’s why I was arguing that ChatGPT cannot plagiarise as that requires intent. Like the difference between saying something that isn’t true versus lying. I believe intent matters when talking about plagiarism, which is why I say that people using ChatGPT are plagiarising, but ChatGPT itself isn’t.

BUT if we grant some casual intentionality to GPT and its cousins, for the sake of a more rudimentary understanding, then yes it plagiarises. It takes the work of others, mixes and messes with things, and spits it out again as seen in the above example. It cannot figure things out, make logical guesses, or have creative thought. There’s no way for it to tell you anything without someone else having told it first.

-

@Sage said in AI Megathread:

By your reasoning, if I say “George Washington was the first president of the United States of America” then it is plagiarism, despite the fact that this is simply something I know. Yes, I did originally learn it from another source, but since I have not stated the source, that is plagiarism.

That is not remotely what I said.

Saying that “D-Day happened on June 6, 1944” is not plagiarism. Regurgitating paragraphs with near-identical wording in sufficient quantities is. That is what I’m saying ChatGPT does at its core. It is not understanding and then restating its own understanding of facts like a human would. It is assembling words using an algorithmic autocomplete that’s asking “what’s the statistically most likely word to come next”. And how does it know what’s most likely to come next? From existing writings it has ingested.

@Pavel said in AI Megathread:

I believe intent matters when talking about plagiarism,

Yeah at some point we can get bogged down in semantics. A plagiarism detector tool isn’t detecting intent, merely judging the output, yet that’s the word we use for it.
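A minimal sketch of what “merely judging the output” can look like: score a candidate text by how many of its three-word sequences also appear in a source text. This is an assumed, simplified technique for illustration; actual plagiarism detectors are more sophisticated, and the function names here are invented.

```python
def word_ngrams(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Return the set of n-word sequences in the text, ignoring case."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_score(candidate: str, source: str, n: int = 3) -> float:
    """Fraction of the candidate's n-grams that also appear in the source."""
    candidate_grams = word_ngrams(candidate, n)
    if not candidate_grams:
        return 0.0  # candidate is shorter than n words
    shared = candidate_grams & word_ngrams(source, n)
    return len(shared) / len(candidate_grams)


source = "the quick brown fox jumps over the lazy dog"
copied = "the quick brown fox jumps over a sleepy cat"
unrelated = "completely different words appear in this sentence"

print(overlap_score(copied, source))
print(overlap_score(unrelated, source))
```

Nothing in the score depends on who produced the candidate text or why, only on how much of its wording matches the source.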

-

@Faraday said in AI Megathread:

Yeah at some point we can get bogged down in semantics.

One of many things linguists and computer scientists have in common.

-

@Trashcan Don’t really know about Bing and its implementation. What I do know is this:

```python
import openai  # legacy (pre-1.0) openai package; ChatCompletion was removed in 1.0

# Ask the model a single question with a fairly high sampling temperature.
completion = openai.ChatCompletion.create(
    model="gpt-3.5-turbo-16k",
    messages=[
        {"role": "user", "content": "What is AresMUSH"}
    ],
    temperature=1.25,
    max_tokens=1024,
    top_p=1,
    frequency_penalty=0,
    presence_penalty=0
)
d = completion.choices
print(d)
```

gives me the following result:

```
[<OpenAIObject at 0x113f32450> JSON: {
  "index": 0,
  "message": {
    "role": "assistant",
    "content": "AresMUSH is a text-based roleplaying game engine that allows users to create and \
run their own roleplay games online. It is built on the MUSH (Multi-User Shared \
Hallucination) platform, which is a type of virtual world or multi-user dungeon. \
AresMUSH provides features and tools for creating and managing game settings, \
characters, and storylines, and allows multiple players to interact with each \
other in real-time through text-based chat and roleplay. It provides a flexible \
and customizable framework for creating a wide range of roleplaying settings \
and experiences."
  },
  "finish_reason": "stop"
}]
```

It could be that the results @Faraday is reporting is because it’s an older model, something to do with how they set the variables for the question, or maybe there’s some other aspect to Bing’s implementation. I don’t really know.

Please note, I am not accusing anyone of lying. I’m just showing my results.
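One thing worth keeping in mind when comparing results: the `temperature=1.25` in the call above means any single response is just one sample. The sketch below uses the standard softmax-with-temperature formulation to show how a higher temperature flattens the distribution over candidate tokens. The scores are made up, and how OpenAI applies temperature internally is assumed here rather than confirmed anywhere in this thread.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities; higher temperature gives a flatter distribution."""
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(score - peak) for score in scaled]
    total = sum(exps)
    return [value / total for value in exps]


logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens

cool = softmax_with_temperature(logits, 0.5)
warm = softmax_with_temperature(logits, 1.25)

print([round(p, 3) for p in cool])
print([round(p, 3) for p in warm])
```

At the lower temperature the top candidate dominates; at 1.25 the less likely candidates get picked noticeably more often, so two runs of the same prompt are less likely to produce the same wording.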

-

@Sage said in AI Megathread:

It could be that the results @Faraday is reporting is because it’s an older model

I’ve seen this behavior just using the basic ChatGPT website. It’s not just related to AresMUSH either - many other folks have observed the same thing. It’s even the basis for various lawsuits against OpenAI.

The point isn’t that everything ChatGPT generates is copied verbatim from some other website. The issues run deeper than that.

-

@Pavel This is where I admit I’m not smart enough to give you a total answer, but it’s certainly more than just brand of card, because the non-reproducibility can be on the same machine across different sessions.

One of the home installs for Stable Diffusion has a sort of ‘setup test’ where they have you reproduce an image from their text settings: you should get the same picture as in the readme (it happens to be a famous anime girl, because naturally). And then it has some troubleshooting: if she looks wrong in this way, maybe you set this wrong. The images you get are never going to be totally unrelated. They’re similar, but along a dimension that isn’t how humans think about similarity: it’s similarity in the latent space, which is how AI images are represented before they get translated to pixels. So ‘similar’ might be the same character but in a different pose, or with an extra limb.

That difference feels egregious to a human viewer but might be mathematically quite close. This is why I chose to include that grid picture with my example because it demonstrates what small changes around fixed values can do. The ‘AI art process’ is a lot of this, looking for interesting seeds and then iteratively exploring around them with slight variations. The degree of fault on that xformers thing was generally within this range.

@tributary I’m really not here to argue philosophical things like ‘the value of art’ or what machine intelligence is compared to human intelligence (the ‘it doesn’t understand’ raised frequently in the thread). AI is a terrible term that we’ve just ended up stuck with for legacy reasons, but it tends to send these discussions off on tangents. My point was that many (maybe all) processes are replicable in theory, but the practicality is variable (taken out to the extremes, if we’re doing philosophical wanking, you get ‘does free will exist or are we just executing eons-old chemical reactions writ large?’, etc).

I’m not sure I’m totally convinced that the steps a person takes using AI software are inherently less valuable than the steps someone takes using Photoshop (and again, what if you use BOTH?). That doesn’t mean that I’m against ethical standards for the models, but I’m also not convinced that even the ‘careless’ ones like SD are really egregious ‘theft’ as they exist (finetune models are a different question altogether). They’re certainly no more so than the work of Patreon fan artists who sell work of copyrighted characters (and who are a LARGE part of the force advocating against AI).

-

@Faraday said in AI Megathread:

I’ve seen this behavior just using the basic ChatGPT website. It’s not just related to AresMUSH either - many other folks have observed the same thing. It’s even the basis for various lawsuits against OpenAI.

I can’t really comment on any lawsuits since I don’t have all the necessary information. However, I will point out that just because a lawsuit exists doesn’t mean it’s very good. Judges tend to only throw out lawsuits when they are really, really bad. (N.B.: I’m also not saying that the basis for the lawsuit is bad. Just that the existence of a lawsuit is not a very good indicator of anything beyond a passing measure of merit).

I will also say that I am not maintaining that OpenAI has a right to just scrape up all this material and use it. That has to do with copyright law, though.

The point isn’t that everything ChatGPT generates is copied verbatim from some other website. The issues run deeper than that.

And I’m not trying to argue there are no issues with ChatGPT. I’m simply saying that I don’t think that the model, as described, necessarily falls into the classical definition of ‘plagiarism’. It does not look like it is really copying ideas from any particular source but instead is just sort of guessing how to string words together to answer a question, which is what any of us already do. It’s not really trying to pass off any information conveyed as ‘its own’ (though it certainly lacks attribution).

The fact that OpenAI is taking information from others and using it to profit (by using it to train the AI) does seem to be a problem, but then isn’t Google doing something similar? Of course Google does provide attribution through the link back and this provides a highly useful service to sites that allow Google to take their information, so the cases certainly aren’t identical.

I’m just saying I’m not sure that calling what it does ‘plagiarism’, at least in the classical sense, is the argument that should be made (N.B.: I am only talking about ChatGPT, not about any of the image generating routines, which probably work very differently from the LLM).

-

@Sage said in AI Megathread:

I’m just saying I’m not sure that calling what it does ‘plagiarism’, at least in the classical sense, is the argument that should be made

And yet we are disagreeing with you and providing reasons. I don’t think either party is going to convince the other, and we shall continue talking in circles.

@bored said in AI Megathread:

does free will exist or are we just executing eons-old chemical reactions writ large?

On this I do have an answer I quite like: Maybe it does, maybe it doesn’t, but I need to eat either way.

-

@Pavel said in AI Megathread:

And yet we are disagreeing with you and providing reasons.

Well, no, you aren’t actually providing reasons. You are moving the argument from the vernacular to specific minority case usage where it now becomes correct, and there’s nothing really wrong with that, provided you supply that context when you make the initial statement.

Now, if you supplied a reason why you were correct in the common vernacular and I missed it, I apologize. If there were statements made to the effect of ‘under the academic definition of plagiarism, what ChatGPT does is plagiarism’ (and by this I mean as the opening statement, not a supporting statement in a follow up post), then again, I apologize.

However, I have not seen you provide a single reason why you are disagreeing with me when the term is used in the ‘classical sense’ (which is what you have implied by taking that specific quote of mine and then stating you have supplied reasons).

Please note, I do not mean for this to come across as hostile. I am simply trying to point out that what you are trying to imply, at least to the best of what I can see, is not correct.

-

@Sage Technically, I said we are supplying reasons that we disagree, not that we’re correct. And while you may not intend your words to come across as hostile, they’re certainly marching towards arrogance whether intended or not.

I have, in fact, said that ChatGPT is incapable of plagiarism. It is incapable of thought entirely, much less the capacity for intent or making claims at all.

I would further argue, in fact, that plagiarism in the ‘classical sense’ is at least somewhat subjective. But if we are to use the definition you selected earlier, “to steal and pass off (the ideas or words of another) as one’s own” then yes, it does that too. Every “idea” it has is someone else’s. It doesn’t think. It merely reproduces, verbatim or in essence, others’ ideas. It cannot have ideas of its own.

ETA:

Further, I would say that an alternate Merriam-Webster definition suits the ‘classical sense’ better: present as new and original an idea or product derived from an existing source

-

@Sage

Bing is using GPT-4 and I can see in your snippet that you’re using GPT-3.5.

Going back to my original post: “plagiarism” got dragged in here because in “the common vernacular” it’s used as a thing society mostly agrees is unethical. In the common vernacular, it has two parts: theft of words/ideas, and claim of those words/ideas as one’s own.

People can (demonstrably) argue until they are blue in the face about whether the first part is happening.

It is not arguable that if you copy/paste from ChatGPT or some other LLM, and do not disclose that you have done so, you’re doing part #2, not because ChatGPT wrote it but because you didn’t. I’m not going to name and shame because that’s not what this post is about, but that is already happening in our community.

In a community fundamentally based on reading and writing with other people, it should be an easy ask for transparency if that is not what’s occurring. People deserve to know when they’re reading the output of an LLM and not their well-written friend Lem.

-

@Trashcan said in AI Megathread:

It is not arguable that if you copy/paste from ChatGPT or some other LLM, and do not disclose that you have done so, you’re doing part #2

Absolutely true, however, blaming ChatGPT for that is like blaming the hammer that someone swings.

I’m not saying I think people should be using ChatGPT. I’m not saying that what OpenAI has done to create it is ‘ok’. I’m just addressing what seems to me to be a piece of misinformation floating around, that ChatGPT itself does nothing but plagiarism (in the common sense of the word).

-

@Sage said in AI Megathread:

that is like blaming the hammer that someone swings

Maybe. But to stretch the metaphor a little, ChatGPT is a warhammer rather than your bog standard tool hammer.

Whether intentionally designed that way or not, that’s how people are using it. And I think that matters a whole lot more than the exact definitions of words - I’m more a descriptivist anyway.

ETA: I’ll gladly step back from “ChatGPT plagiarises” verbiage if it can tell me where it gleaned whatever piece of information it’s currently telling me. Right now it’s incapable of actually citing its sources, even going so far as making them up.